
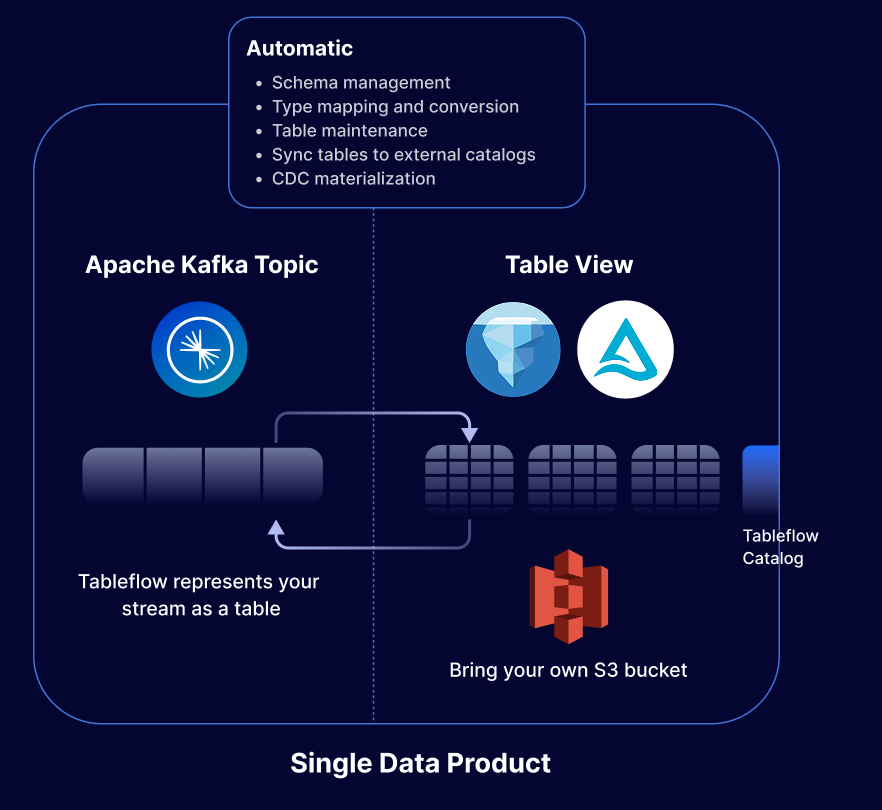

Easily represent Kafka topics as Apache Iceberg® or Delta Lake tables

Represent Kafka topics and their associated schemas as open table formats such as Apache Iceberg® (GA) or Delta Lake (Open Preview) in a few clicks, then use those tables to feed any data warehouse, data lake, or analytics engine that reads these formats

Topics to tables with less effort and cost

Simplify representing Kafka topics as Iceberg or Delta tables that serve as bronze and silver tables, reducing engineering effort and compute costs
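To make "topics to tables" concrete, here is a minimal, purely illustrative Python sketch of the bronze and silver idea. It is not Tableflow's implementation and uses no Kafka, Iceberg, or Delta library. The `KafkaRecord` type, the `_partition`/`_offset`/`_timestamp_ms` column names, and the rule that a silver table keeps only the latest row per key are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class KafkaRecord:
    """Hypothetical stand-in for a consumed Kafka record whose value has
    already been deserialized using its registered schema."""
    partition: int
    offset: int
    timestamp_ms: int
    key: Optional[str]
    value: Dict[str, Any]


def to_bronze_rows(records: List[KafkaRecord]) -> List[Dict[str, Any]]:
    """Bronze layer: append-only, one row per record, Kafka metadata kept."""
    return [
        {
            "_partition": r.partition,
            "_offset": r.offset,
            "_timestamp_ms": r.timestamp_ms,
            "key": r.key,
            **r.value,
        }
        for r in records
    ]


def to_silver_rows(bronze: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Silver layer (assumed rule): keep the latest row per non-null key,
    comparing timestamp then offset; return rows sorted by key."""
    latest: Dict[str, Dict[str, Any]] = {}
    for row in bronze:
        key = row["key"]
        if key is None:
            continue  # keyless records cannot be deduplicated by key
        prev = latest.get(key)
        if prev is None or (row["_timestamp_ms"], row["_offset"]) > (
            prev["_timestamp_ms"],
            prev["_offset"],
        ):
            latest[key] = row
    return [latest[k] for k in sorted(latest)]


if __name__ == "__main__":
    records = [
        KafkaRecord(0, 0, 1000, "u1", {"plan": "free"}),
        KafkaRecord(0, 1, 2000, "u1", {"plan": "pro"}),
        KafkaRecord(1, 0, 1500, "u2", {"plan": "free"}),
    ]
    bronze = to_bronze_rows(records)
    silver = to_silver_rows(bronze)
    print(len(bronze), [r["plan"] for r in silver])  # → 3 ['pro', 'free']
```

In a real pipeline these rows would land in data files (typically Parquet) tracked by Iceberg or Delta metadata; the sketch only shows the row-level shape of each layer.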

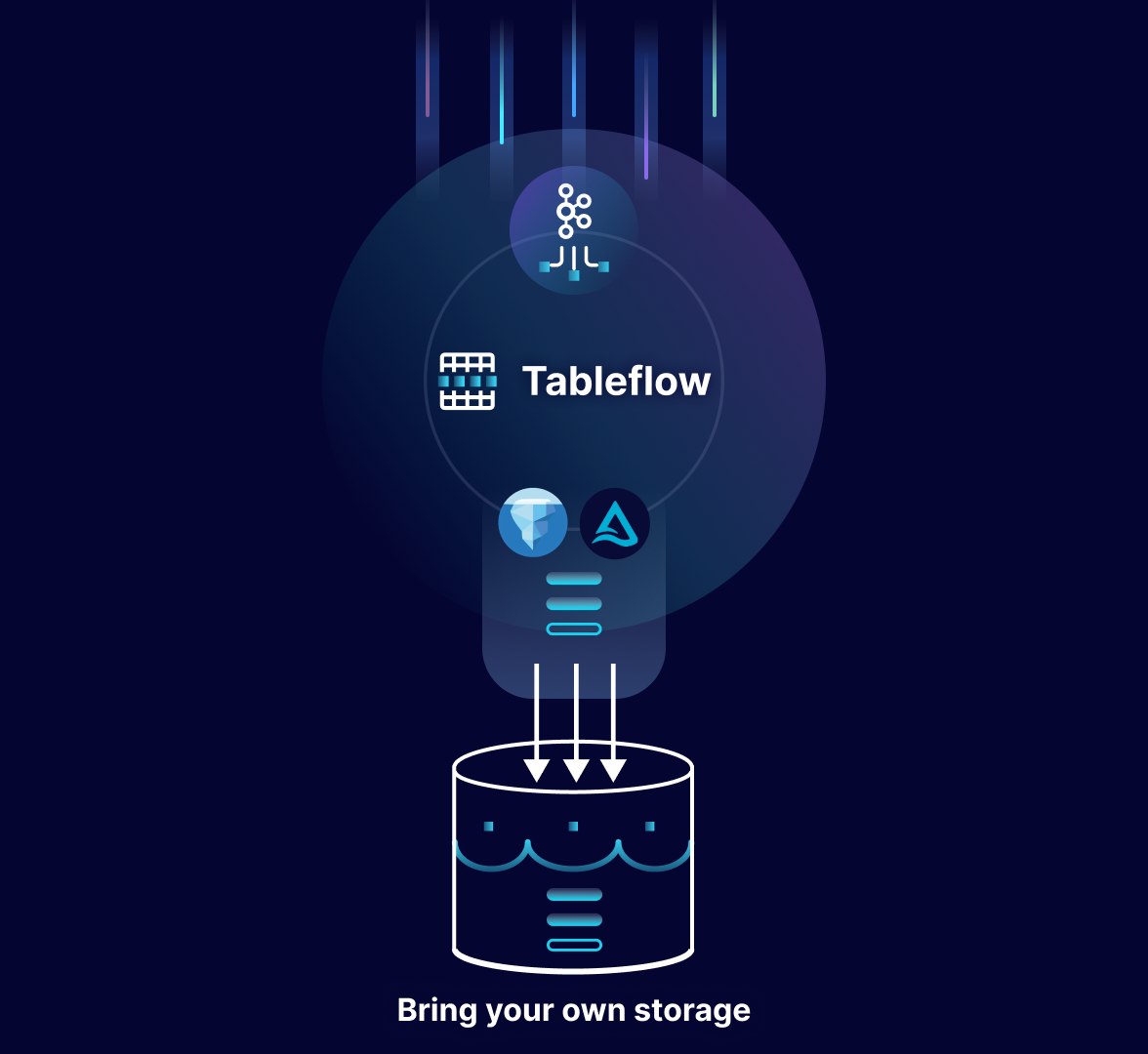

Enhanced flexibility by bringing your own storage

Store fresh, up-to-date Iceberg or Delta tables once in your own compatible storage and reuse them many times, for flexibility, cost savings, and security

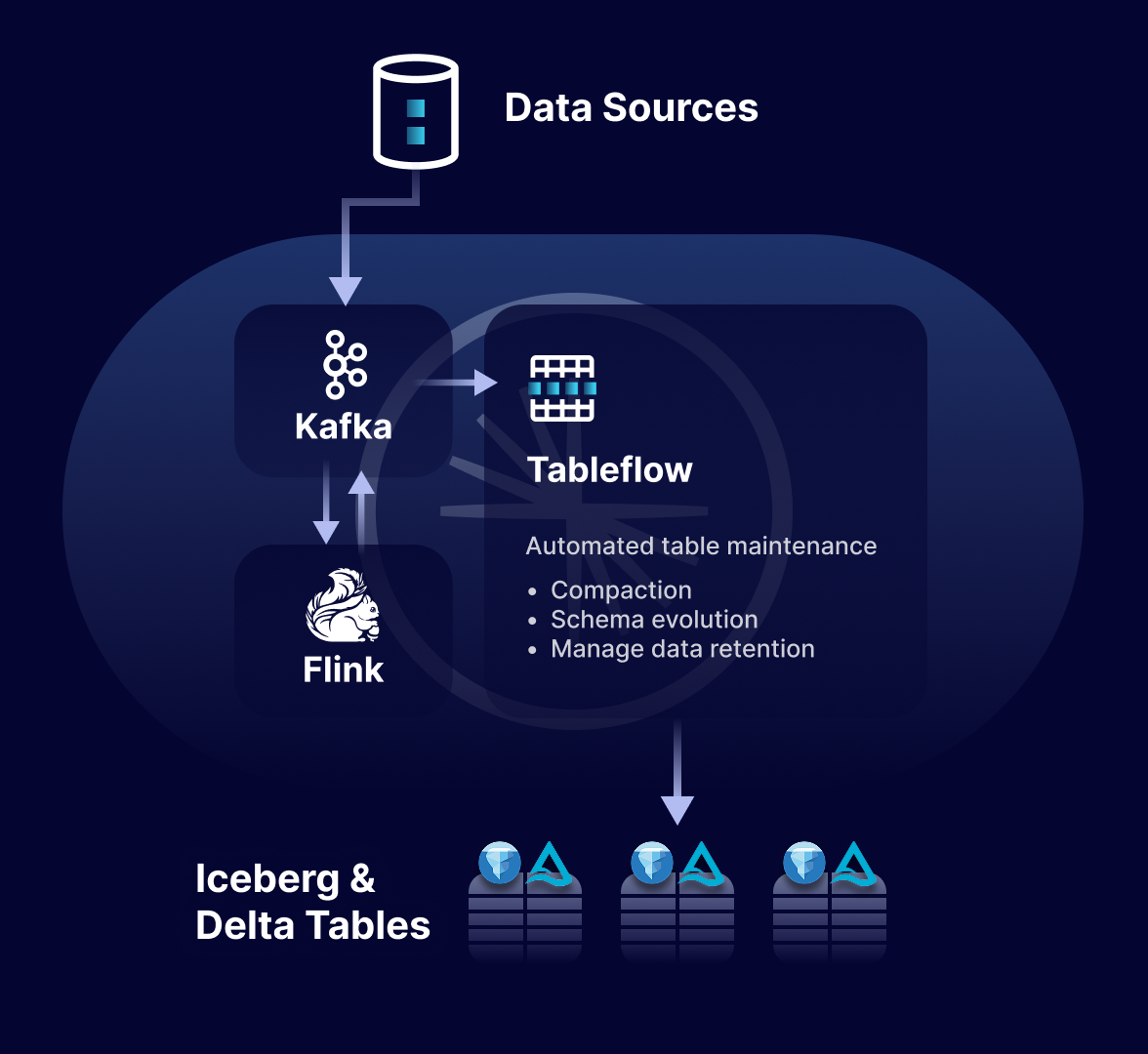

Build gold standard tables with our partner ecosystem

Leverage our commercial and ecosystem partners to transform bronze or silver tables into gold standard tables for a wide range of AI and analytics use cases

Strong read performance & automated data maintenance

Continuously optimize read performance with file compaction, which consolidates small files into larger, more manageable ones to keep data storage and retrieval efficient
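Compaction can be pictured as a bin-packing problem. The sketch below is a hypothetical planner, not Confluent's algorithm: it takes `(file name, size in bytes)` pairs, treats files smaller than an assumed `small_threshold` as candidates, and groups them with first-fit decreasing so that each group's total stays at or below an assumed `target_bytes`. Each resulting group would then be rewritten as one larger file.

```python
from typing import List, Tuple


def plan_compaction(
    files: List[Tuple[str, int]],
    target_bytes: int,
    small_threshold: int,
) -> List[List[str]]:
    """Group small files into rewrite groups using first-fit decreasing.

    Hypothetical planner for illustration: files smaller than
    small_threshold are candidates, and each group's total size stays at
    or below target_bytes. Single-file groups are dropped, because
    rewriting one file alone would not reduce the file count.
    """
    # Placing the largest candidates first tends to pack bins more tightly.
    candidates = sorted(
        (f for f in files if f[1] < small_threshold),
        key=lambda f: f[1],
        reverse=True,
    )
    groups: List[List[str]] = []
    totals: List[int] = []
    for name, size in candidates:
        for i, total in enumerate(totals):
            if total + size <= target_bytes:
                groups[i].append(name)
                totals[i] += size
                break
        else:
            # No existing group has room: start a new one.
            groups.append([name])
            totals.append(size)
    return [g for g in groups if len(g) > 1]


if __name__ == "__main__":
    # Toy sizes; d.parquet is already large, so it is left alone.
    files = [("a.parquet", 10), ("b.parquet", 20), ("c.parquet", 30), ("d.parquet", 200)]
    print(plan_compaction(files, target_bytes=64, small_threshold=50))
    # → [['c.parquet', 'b.parquet', 'a.parquet']]
```

First-fit decreasing is a simple, common heuristic for this kind of grouping; production table maintenance typically also weighs partitioning, sort order, and concurrent writes, which this sketch ignores.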

